What is This “Lambda” You Speak Of?

Once you get into FP, you’ll quickly start hearing the terms “lambda” and “lambda calculus.” The goal of this chapter is to provide background information on where those names come from, and what they mean.

This chapter is mostly about the history of functional programming, so for people who don’t like history, I first share a short lesson that just explains those terms. After that, I add a full version that discusses the invention of the lambda calculus, several key people in the FP history, and languages like Lisp, Haskell, and Scala.

For those who don’t like history, this is the shortest possible “history of functional programming” I can provide that explains where the terms lambda and lambda calculus come from.

Back in the 1930s, Alonzo Church was studying mathematics at Princeton University and began using the Greek symbol λ — “lambda” — to describe ideas he had about these things called functions. Because his work preceded the development of the first electronic, general-purpose computer by at least seven years, you can imagine him writing that symbol on chalkboards to describe his concept of functions.

So, historically speaking, that’s the short story of where the term “lambda” comes from; it’s just a symbol that Mr. Church chose when he first defined the concept of a function.

Fast-forward to today, and the name lambda is generally used to refer to anonymous functions. That’s all it means, and it bears highlighting:

In modern functional programming, lambda means “anonymous function.”

If you’re familiar with other programming languages, you may know that Python and Ruby use the keyword lambda to define anonymous functions.
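As a minimal sketch of what “anonymous function” means in practice, here is the idea in Python, one of the languages just mentioned. The names `double_named`, `double`, `numbers`, and `doubled` are made up for this illustration.

```python
# A named function, defined the usual way.
def double_named(x):
    return x * 2

# The same logic written as a lambda: a function value with no name of its own.
# Binding it to the variable `double` is only for the demonstration.
double = lambda x: x * 2

# More commonly a lambda is written inline and passed straight to another function.
numbers = [1, 2, 3]
doubled = list(map(lambda x: x * 2, numbers))

print(double_named(4))  # 8
print(double(4))        # 8
print(doubled)          # [2, 4, 6]
```

The lambda passed to `map` is never given a name at all, which is the typical way anonymous functions are used.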

If you’re not familiar with anonymous functions, I wrote about them in the Scala Cookbook, and I also provide an overview of them in the appendices of this book.

As an aerospace engineer, I always thought the name “calculus” referred to the form of mathematics that has to do with infinitesimal changes and derivatives, but the name calculus also has a broader meaning. The word calculus can mean “a formal system,” and indeed, that’s how Wikipedia defines lambda calculus:

“Lambda calculus (also written as λ-calculus) is a formal system in mathematical logic for expressing computation based on function abstraction and application using variable binding and substitution.”

So we have:

lambda means “anonymous function,” and

calculus means “a formal system”

Therefore, the term lambda calculus refers to “a formal way to think about functions.”
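To make “function abstraction and application” a little more concrete, here is a rough sketch in Python. It is only an analogy: pure lambda calculus has no built-in numbers or `+` operator, and I use them here just to keep the example readable. The names `increment` and `result` are made up for this illustration.

```python
# Abstraction: the lambda term "λx. x + 1" binds the variable x
# inside the body "x + 1". Python's lambda syntax mirrors this closely.
increment = lambda x: x + 1

# Application: "(λx. x + 1) 2" substitutes 2 for x in the body,
# leaving "2 + 1", which evaluates to 3.
result = increment(2)

# The same application written without naming the function first.
same_result = (lambda x: x + 1)(2)

print(result)       # 3
print(same_result)  # 3
```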

That same Wikipedia link states this:

“Lambda calculus provides a theoretical framework for describing functions and their evaluation. Although it is a mathematical abstraction rather than a programming language, it forms the basis of almost all functional programming languages today.”

When I first started learning about functional programming, I found these terms to be a little intimidating, but as with most FP terms, they’re just uncommon words for talking about “functions and their evaluation.”

If you’re interested in the deeper history of FP, including a guy named Haskell Curry, the relationship between FORTRAN and FP, and languages like Lisp, Haskell, Scala, and Martin Odersky’s work that led to the creation of Scala, continue reading the next section. Otherwise feel free to move on to the next chapter.

Back in the 1930s — 80+ years ago — gasoline cost 17 cents a gallon, World War II hadn’t started yet (not until 1939, officially), the United States was in the midst of the Great Depression (1929-1939), and a man by the name of Alonzo Church was studying mathematics at Princeton University along with other legendary figures like Alan Turing (who finished his PhD under Church) and John von Neumann.

Mr. Church spent two years as a National Research Fellow and a year at Harvard, and was interested in things like mathematical and symbolic logic. In 1956 he wrote a classic book titled “Introduction to Mathematical Logic.”

In 1936 Mr. Church released his work on “lambda calculus,” and it turned out to be a very important work indeed. Think about it: How many other papers from 1936 do you know that influence life today? His biography page at www-history.mcs.st-andrews.ac.uk states:

“Church’s great discovery was lambda calculus … his remaining contributions were mainly inspired afterthoughts in the sense that most of his contributions, as well as some of his pupils’, derive from that initial achievement.”

Wikipedia previously stated that the name “lambda” was arbitrary:

“The name derives from the Greek letter lambda (λ) used to denote binding a variable in a function. The letter itself is arbitrary and has no special meaning.”

However, other research shows that the choice of the name wasn’t entirely arbitrary. After all, this is the man who founded the “Journal of Symbolic Logic,” so I personally doubted it was completely arbitrary. I imagine him drawing this symbol on chalkboards and papers in the 1930s, so my first guess was that he wanted a symbol that was easy to read and write, but fortunately you don’t have to rely on my guesswork.

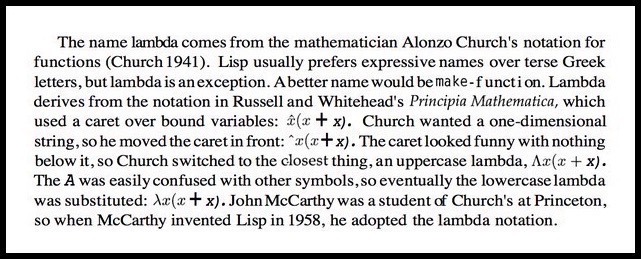

The book “Paradigms of Artificial Intelligence Programming: Case Studies in Common Lisp,” by Peter Norvig, discusses the origin of the λ symbol, as shown in the figure above.

Note that Mr. Norvig also states that a better name for lambda would be make function.

As mentioned, Mr. Church introduced the world to the “lambda calculus” in 1936. On his biography page, Wikipedia describes his work like this:

“The lambda calculus emerged in his 1936 paper showing the unsolvability of the Entscheidungsproblem. This result preceded Alan Turing’s work on the halting problem, which also demonstrated the existence of a problem unsolvable by mechanical means. Church and Turing then showed that the lambda calculus and the Turing machine used in Turing’s halting problem were equivalent in capabilities, and subsequently demonstrated a variety of alternative ‘mechanical processes for computation.’ This resulted in the Church–Turing thesis.”

The Wikipedia functional programming page also states:

“Functional programming has its roots in lambda calculus, a formal system developed in the 1930s to investigate computability, the Entscheidungsproblem, function definition, function application, and recursion. Many functional programming languages can be viewed as elaborations on the lambda calculus.”

The book Becoming Functional states that lambda calculus introduced the concept of passing a function to a function. I cover this topic in the Scala Cookbook, and discuss it in several lessons in this book.
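As a small illustration of passing a function to a function, here is a sketch in Python. The names `apply_twice` and `add_three` are hypothetical and exist only for this example.

```python
def apply_twice(f, x):
    """Call f on x, then call f again on that result."""
    return f(f(x))

def add_three(n):
    return n + 3

# A named function passed as an argument.
print(apply_twice(add_three, 10))             # 16

# An anonymous function (a lambda) passed as an argument.
print(apply_twice(lambda s: s + "!", "hi"))   # hi!!
```

Here `apply_twice` knows nothing about what `f` does; it only knows that `f` is something it can call.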

While Mr. Church’s lambda calculus was well known in its time, it’s important to note that the ENIAC — generally recognized as the world’s first electronic, general-purpose computer — wasn’t put into action until 1946, and it fit Alan Turing’s ideas better than Mr. Church’s.

The name Lisp is derived from “LISt Processor.”

But then in 1958, MIT professor John McCarthy, a former student of Mr. Church, introduced a computer programming language named Lisp, which was “an implementation of Mr. Church’s lambda calculus that worked on von Neumann computers.”

That second Wikipedia link describes the importance of the Lisp programming language:

“Lisp was originally created as a practical mathematical notation for computer programs, influenced by the notation of Alonzo Church’s lambda calculus. It quickly became the favored programming language for artificial intelligence (AI) research. As one of the earliest programming languages, Lisp pioneered many ideas in computer science, including tree data structures, automatic storage management, dynamic typing, conditionals, higher-order functions, recursion, and the self-hosting compiler.”

That’s pretty impressive for a programming language created in the 1950s. (Beatlemania didn’t start until 1963, few people knew the Rolling Stones before 1965, and color television wouldn’t become popular in the United States until the mid-1960s.)

As a last note about Lisp, famed programmer Eric Raymond, author of The Cathedral and the Bazaar, wrote an article titled How to become a hacker, where he wrote this about Lisp:

“LISP is worth learning for a different reason — the profound enlightenment experience you will have when you finally get it. That experience will make you a better programmer for the rest of your days, even if you never actually use LISP itself a lot.”

One of the most interesting computer programming books I’ve ever come across is The Little Schemer. (Scheme is a dialect of Lisp.) The Little Schemer is written in a conversational style between a student and a teacher, where the teacher’s main goal is to get the student to think recursively and see patterns. Another book named Land of Lisp may hold the distinction of being the programming book that looks most like a cartoon. It’s another good resource, and it’s a much more complete introduction to the language than The Little Schemer.

If you happen to work with Gimp (GNU Image Manipulation Program) and want to automate your tasks, you’ll find that it supports a scripting language named Script-Fu by default. Script-Fu is a dialect of Scheme.

In 1977, John Backus received the Turing Award, and he titled his award lecture “Can Programming Be Liberated From the von Neumann Style? A Functional Style and its Algebra of Programs.” With minor edits, that link states:

“Backus later worked on a ‘function-level’ programming language known as FP … sometimes viewed as Backus’s apology for creating FORTRAN, this paper did less to garner interest in the FP language than to spark research into functional programming in general.”

The Wikipedia functional programming page adds:

“He defines functional programs as being built up in a hierarchical way by means of ‘combining forms’ that allow an ‘algebra of programs’; in modern language, this means that functional programs follow the principle of compositionality.”

(Note: I write much more about “composition” in the lessons of this book.)

I created the sketch of John Backus from his image on ibm.com.

Mr. Backus did much more than this, including his work on the ALGOL 60 programming language, and creation of the Backus-Naur Form (BNF). But in terms of functional programming, his 1977 lecture was an impetus for additional research.

Way back in 1986, I was mostly interested in playing baseball, and programmers for a company named Ericsson created a programming language named Erlang, which was influenced by Prolog. Wikipedia states, “In 1998 Ericsson announced the AXD301 switch, containing over a million lines of Erlang and reported to achieve a high availability of nine ‘9’s.”

Erlang is not a pure functional programming language like Haskell, but it’s an actor-based, message-passing language. If you’ve heard that term before, that may be because the Akka actor library was inspired by Erlang. If you’ve used Akka, you know that one actor can’t modify the state of another actor, and in this regard, the Erlang message-passing architecture uses FP concepts.

Joe Armstrong is a famous Erlang programmer (and co-creator of the language), and in his book, Programming Erlang, he writes:

“In Erlang it’s OK to mutate state within an individual process but not for one process to tinker with the state of another process … processes interact by one method, and one method only, by exchanging messages. Processes share no data with other processes. This is the reason why we can easily distribute Erlang programs over multicores or networks.”

In that statement, Mr. Armstrong’s “processes” are equivalent to Akka actors, so the same statement can be made: “Actors share no data with other actors, and because of this we can easily distribute Akka programs over multicores or networks.” As you’ll see in the lessons to come, using only immutable values lets us say the same things about pure FP applications.

Haskell Brooks Curry (1900-1982) has the distinction of having three programming languages named after him (Haskell, Brook, and Curry). In addition to those, the process of “currying” is also named after him.

“The focus of Curry’s work were attempts to show that combinatory logic could provide a foundation for mathematics … By working in the area of Combinatory Logic for his entire career, Curry essentially became the founder and biggest name in the field … In 1947 Curry also described one of the first high-level programming languages.”
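Since “currying” comes up often in FP, here is a minimal sketch of the idea in Python: a function of several arguments is rewritten as a chain of functions that each take one argument. The names `add`, `add_curried`, and `add_five` are made up for this illustration.

```python
# An ordinary function that takes two arguments at once.
def add(a, b):
    return a + b

# The curried form: a function of one argument that returns
# another function of one argument.
def add_curried(a):
    return lambda b: a + b

# Supplying only the first argument yields a new, more specific function.
add_five = add_curried(5)

print(add(5, 3))           # 8
print(add_curried(5)(3))   # 8
print(add_five(10))        # 15
```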

(The newspaper image of Haskell Curry comes from this catonmat.net page.)

For a brief history of the Haskell programming language, I’ll turn things over to the book, Learn You a Haskell for Great Good!:

“Haskell was made by some really smart guys (with PhDs). Work on Haskell began in 1987 when a committee of researchers got together to design a kick-ass language. In 2003 the Haskell Report was published, which defines a stable version of the language.”

For a longer and more technical account of Haskell’s history, I recommend searching for a PDF titled, “A History of Haskell: Being Lazy With Class,” by Paul Hudak, John Hughes, Simon Peyton Jones, and Philip Wadler, four of the co-creators of Haskell. In that paper you’ll learn a few more things, including that Haskell was inspired by a programming language named Miranda.

In that paper you’ll also find this quote from Virginia Curry, Haskell Curry’s wife:

“You know, Haskell actually never liked the name Haskell.”

If you want to learn Haskell, Learn You a Haskell for Great Good! is the book I highly recommend starting with. Real World Haskell is another good resource.

The maturation of the Haskell language and the nearly simultaneous introduction of multicore CPUs in mainstream computing devices have been a significant driving force for the increasing popularity of FP. Because CPUs no longer double in speed every two years, they now include multiple cores and Hyper-threading technology to provide performance gains. This makes multicore (concurrent) programming important, and as luck would have it, pure functions and immutable values make concurrent programming easier.

As Joe Armstrong writes in his 2013 book Programming Erlang, “Modern processors are so fast that a single core can run four hyperthreads, so a 32-core CPU might give us an equivalent of 128 threads to play with. This means that ‘a hundred times faster’ is within striking distance. A factor of 100 does get me excited. All we have to do is write the code.”
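The claim that pure functions and immutable values make concurrent programming easier can be sketched in a few lines of Python. This is only an illustration of the safety argument, not a benchmark, and standard CPython threads won’t necessarily make this particular code faster. The names `square`, `inputs`, and `results` are made up for the example.

```python
from concurrent.futures import ThreadPoolExecutor

def square(n):
    # Pure: the result depends only on n, and nothing outside
    # the function is read or modified.
    return n * n

# A tuple is immutable, so no worker can change the shared input data.
inputs = (1, 2, 3, 4, 5)

with ThreadPoolExecutor(max_workers=4) as pool:
    # Executor.map returns results in input order, no matter
    # which worker happens to finish first.
    results = tuple(pool.map(square, inputs))

print(results)  # (1, 4, 9, 16, 25)
```

Because `square` touches no shared state, there is nothing to lock and no ordering between workers to reason about.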

Martin Odersky was born in 1958, and received his Ph.D. under the supervision of Niklaus Wirth (who is best known as a programming language designer, including the Pascal language, and received the Turing Award in 1984).

Mr. Odersky is generally best known for creating Scala, but before that he also created a language named Pizza, then Generic Java, and the javac compiler. With a little bit of editing for conciseness, the “Scala prehistory” page on scala-lang.org states:

“In 1999, after he joined EPFL, the direction of his work changed a bit. The goal was still to combine functional and object-oriented programming, but without the restrictions imposed by Java. The first step was Funnel, a minimalist research language based on functional nets … Funnel was pleasingly pure from a language design standpoint, with very few primitive language features. Almost everything, including classes and pattern matching, would be done by libraries and encodings.”

“However, it turned out that the language was not very pleasant to use in practice. Minimalism is great for language designers but not for users … The second — and current — step is Scala, which took some of the ideas of Funnel and put them into a more pragmatic language with special focus on interoperability with standard platforms. Scala’s design was started in 2001. A first public release was done in 2003. In 2006, a second, redesigned version was released as Scala v 2.0.”

I created the sketch of Martin Odersky from the image on his Wikipedia page.

Skipping over a few important programming languages like ML and OCaml and fast-forwarding to the here and now, in 2016 there are quite a few pure and impure functional programming languages, including Haskell, Erlang, Lisp/Scheme variants, F# (“a .NET implementation of OCaml”), Clojure (a dialect of Lisp), and of course, Scala. (My apologies to any languages I omitted.)

On their “programming languages by type” page, Wikipedia provides a list of functional programming languages that are considered “pure” and “impure.”

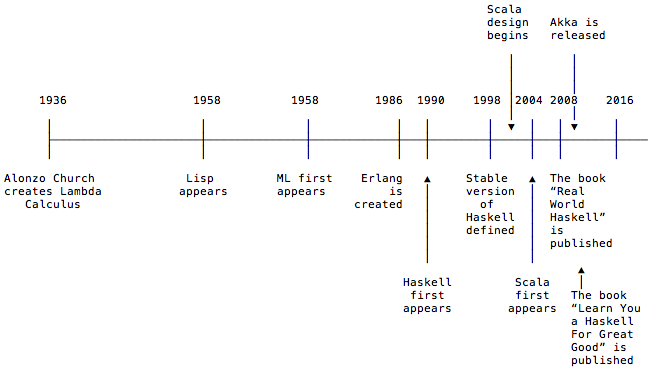

The figure above shows a rough timeline of some of the important events in the history of functional programming.

It’s important to note that Mr. Church most likely had no interest in things like (a) maintaining state over long periods of time, (b) interacting with files (reading and writing), and (c) networking. In fact, I’m pretty sure that the concept of a “file” had not been invented in the 1930s; packet-switching networks weren’t invented until the late 1960s; and DARPA didn’t adopt TCP/IP until 1983.

I mention this because while lambda calculus is important as “a theoretical framework for describing functions and their evaluation,” Mr. Church never said, “Let me tell you exactly how to work with files, GUIs, databases, web services, and maintaining state in functional applications … (followed by his solutions).”

This is important, because as mentioned, pure functional programs consist of only immutable values and pure functions. By definition, they can’t have I/O.
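To make the distinction concrete, here is a small Python sketch that contrasts a pure function with one that performs I/O. The names `area` and `area_and_report` are hypothetical and exist only for this illustration.

```python
def area(width, height):
    # Pure: the same inputs always produce the same output,
    # and calling it changes nothing outside the function.
    return width * height

def area_and_report(width, height):
    # Impure: writing to standard output is I/O, a side effect
    # that reaches outside the function.
    result = width * height
    print(f"area = {result}")
    return result

print(area(3, 4))        # 12
area_and_report(3, 4)    # prints "area = 12"
```

Both functions return the same value, but only the first one can be understood entirely by looking at its inputs and its return value.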

As an example of this problem (I/O in an FP language), the C programming language, created between 1969 and 1973, could handle I/O, but here’s what the Wikipedia monad page states about Haskell, I/O, and monads:

“Eugenio Moggi first described the general use of monads to structure programs in 1991. Several people built on his work … early versions of Haskell used a problematic ‘lazy list’ model for I/O, and Haskell 1.3 introduced monads as a more flexible way to combine I/O with lazy evaluation.”

When I write about monads later in this book, I like to remember that lambda calculus was invented in 1936, but monads weren’t described (invented) until 1991, and weren’t added to Haskell until version 1.3, which was released in 1996. That’s 60 years in between (a) lambda calculus and (b) monads to handle I/O in Haskell.

If you like history, Walter Isaacson’s book, The Innovators: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution, is a detailed history of computer technology, tracing it all the way back to Ada Lovelace in the 1840s. The audiobook version of The Innovators is over 17 hours long. I listened to it while driving across the United States in 2015, and I highly recommend it. (His biographies of Albert Einstein and Steve Jobs are also excellent.)

As I wrote the first draft of this chapter, Jamie Allen, Senior Director of Global Services for Lightbend, tweeted “When I need to break a problem into functions, thinking in LiSP helps me tremendously.”

My first exposure to the history of functional programming came in an article titled Functional Programming for the Rest of Us.

“Paradigms of Artificial Intelligence Programming: Case Studies in Common Lisp,” by Peter Norvig

“In many ways, F# is essentially a .Net implementation of OCaml”